Apprentissage machine

John Samuel

CPE Lyon

Year: 2022-2023

Email: john(dot)samuel(at)cpe(dot)fr

Soit

Alors

Prendre des décisions signifie appliquer tous les classificateurs à un échantillon invisible x et prédire l'étiquette k pour laquelle le classificateur correspondant rapporte le score de confiance le plus élevé : \[\hat{y} = \underset{k \in \{1 \ldots K\}}{\arg\!\max}\; f_k(x)\]

\[f(x)=x\]

\[f'(x)=1\]

\[f(x) = \begin{cases} 0 & \text{for } x < 0\\ 1 & \text{for } x \ge 0 \end{cases} \]

\[f'(x) = \begin{cases} 0 & \text{for } x \ne 0\\ ? & \text{for } x = 0\end{cases}\]

\[f(x)=\sigma(x)=\frac{1}{1+e^{-x}}\]

\[f'(x)=f(x)(1-f(x))\]

\[f(x)=\tanh(x)=\frac{(e^{x} - e^{-x})}{(e^{x} + e^{-x})}\]

\[f'(x)=1-f(x)^2\]

\[f(x) = \begin{cases} 0 & \text{for } x \le 0\\ x & \text{for } x > 0\end{cases} = \max\{0,x\}= x \textbf{1}_{x>0}\]

\[f'(x) = \begin{cases} 0 & \text{for } x \le 0\\ 1 & \text{for } x > 0\end{cases}\]

\[f(x)=e^{-x^2}\]

\[f'(x)=-2xe^{-x^2}\]

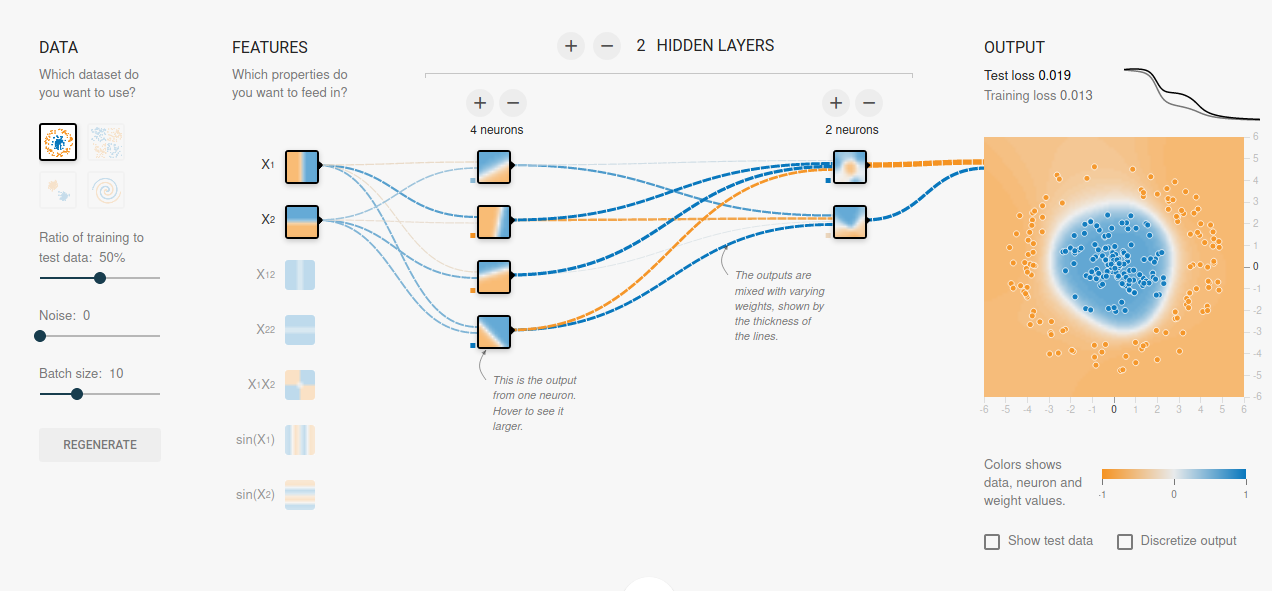

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import SGD

# Créer un modèle séquentiel

model = Sequential()

model.add(Dense(4, activation='relu', input_shape=(3,)))

model.add(Dense(units=2, activation='softmax'))

# Compilation du modèle

sgd = SGD(lr=0.01)

model.compile(loss='mean_squared_error',

optimizer=sgd,metrics=['accuracy'])

\( \begin{matrix} \ \ 0 &\ \ 0 &\ \ 0 \\ \ \ 0 &\ \ 1 &\ \ 0 \\ \ \ 0 &\ \ 0 &\ \ 0 \end{matrix} \)

\( \begin{matrix} \ \ 1 & 0 & -1 \\ \ \ 0 & 0 & \ \ 0 \\ -1 & 0 & \ \ 1 \end{matrix} \)

\( \frac{1}{9} \begin{matrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{matrix} \)

\( \frac{1}{16} \begin{matrix} 1 & 2 & 1 \\ 2 & 4 & 2 \\ 1 & 2 & 1 \end{matrix} \)

\[ \begin{bmatrix} x_{11} & x_{12} & \cdots & x_{1n} \\ x_{21} & x_{22} & \cdots & x_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ x_{m1} & x_{m2} & \cdots & x_{mn} \\ \end{bmatrix} * \begin{bmatrix} y_{11} & y_{12} & \cdots & y_{1n} \\ y_{21} & y_{22} & \cdots & y_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ y_{m1} & y_{m2} & \cdots & y_{mn} \\ \end{bmatrix} = \sum^{m-1}_{i=0} \sum^{n-1}_{j=0} x_{(m-i)(n-j)} y_{(1+i)(1+j)} \]

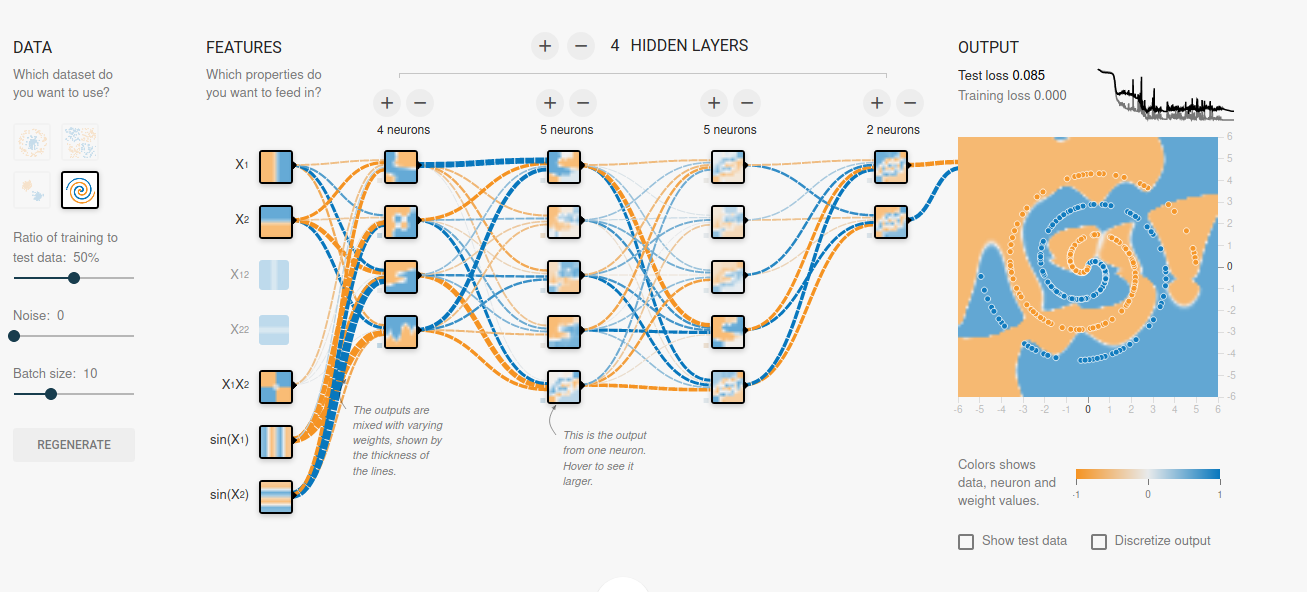

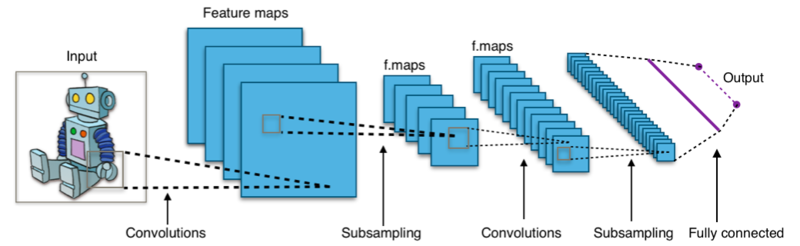

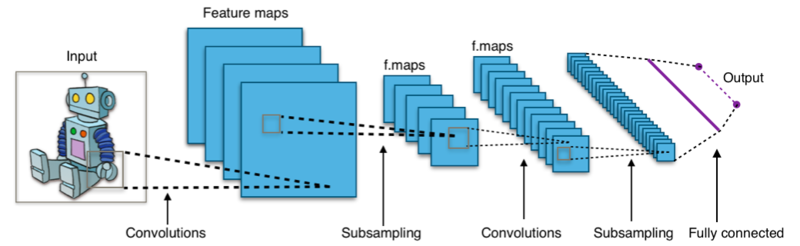

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

(train_images, train_labels), (test_images, test_labels) = datasets.cifar10.load_data()

train_images, test_images = train_images / 255.0, test_images / 255.0

# Créer un modèle séquentiel (réseaux de neurones convolutionnels)

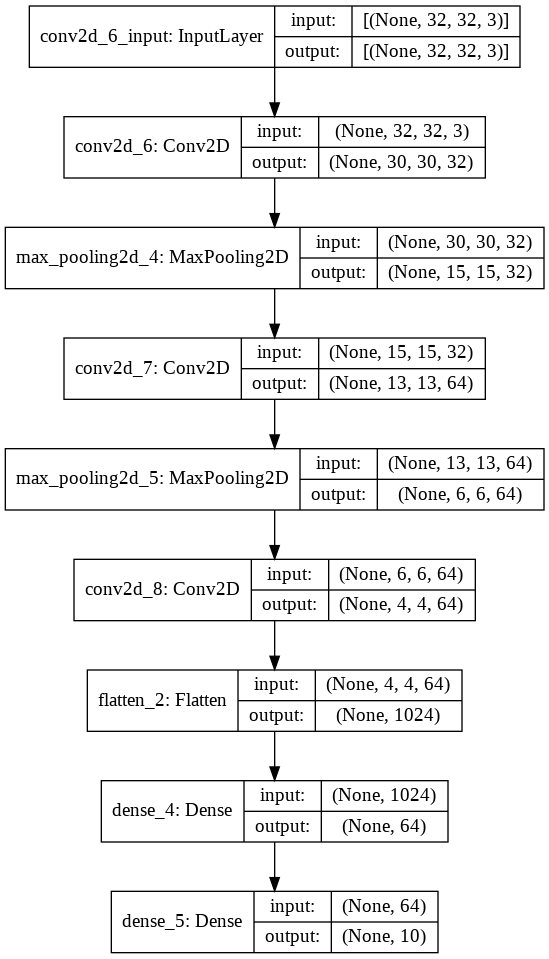

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(32, 32, 3)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.Flatten())

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(10)

#Compilation du modèle

model.compile(optimizer='adam',

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=['accuracy'])

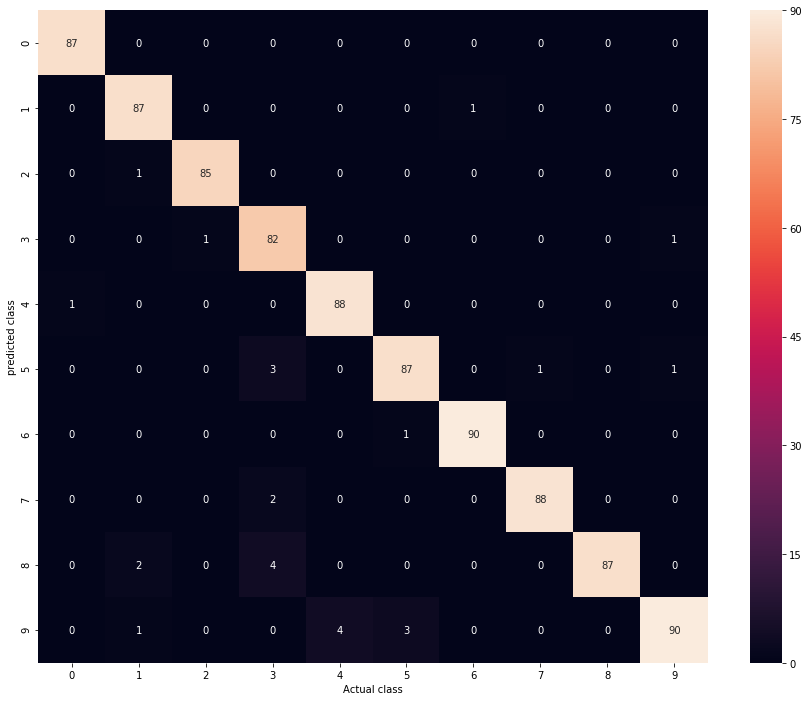

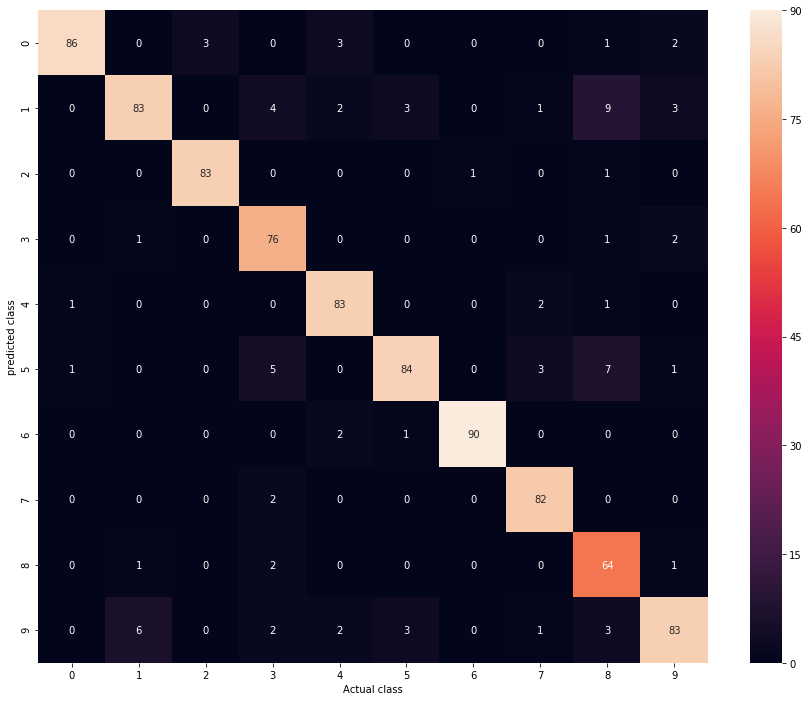

history = model.fit(train_images, train_labels, epochs=10,

validation_data=(test_images, test_labels))